Basics of Philosophy: Logic

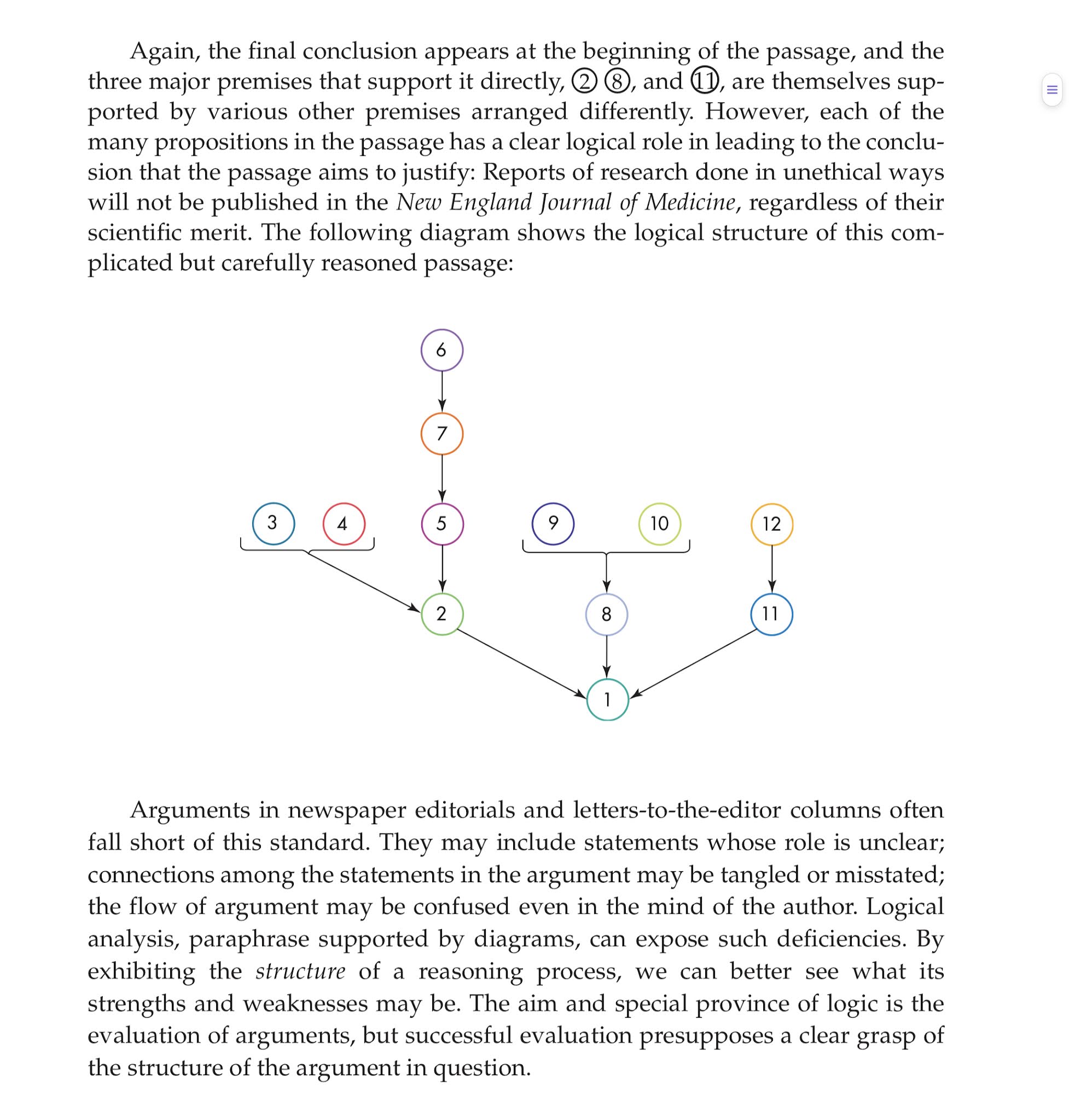

Logic (from the Greek "logos", which has a variety of meanings including word, thought, idea, argument, account, reason or principle) is the study of reasoning, or the study of the principles and criteria of valid inference and demonstration. It attempts to distinguish good reasoning from bad reasoning.

Aristotle defined logic as "new and necessary reasoning": "new" because it allows us to learn what we do not know, and "necessary" because its conclusions are inescapable. Logic asks questions like "What is correct reasoning?", "What distinguishes a good argument from a bad one?", "How can we detect a fallacy in reasoning?"

Logic investigates and classifies the structure of statements and arguments, both through the study of formal systems of inference and through the study of arguments in natural language. It deals only with propositions (declarative sentences, used to make an assertion, as opposed to questions, commands or sentences expressing wishes) that are capable of being true or false. It is not concerned with the psychological processes connected with thought, or with emotions, images and the like. It covers core topics such as the study of fallacies and paradoxes, as well as specialized analysis of reasoning using probability and arguments involving causality and argumentation theory.

Logical systems should have three things: consistency (which means that none of the theorems of the system contradict one another); soundness (which means that the system's rules of proof will never allow a false inference from a true premise); and completeness (which means that there are no true sentences in the system that cannot, at least in principle, be proved in the system).

https://www.philosophybasics.com/branch_logic.html

How to Diagram Arguments in Logic

( https://philosophy.lander.edu/logic/diagram.html )

Abstract: Analyzing the structure of arguments is clarified by representing the logical relations of premises and conclusion in diagram form. Ordinary language argument examples are explained and diagrammed.

-

Argument Defined

Arguments in logic are composed of premises offered as reasons in support of a conclusion. They are not defined as quarrels or disputes.

-

The use of the term “argument” in logic is in accordance with this precising definition; the term is not used in logic to refer to bickering or contentious disagreements.

Formal arguments are evaluated by their logical structure; informal arguments are studied and evaluated as parts of ordinary language and interpersonal discourse.

-

The presence of an argument in a passage is discovered by understanding the author's intention of proving a statement by offering reasons or evidence for the truth of some other statement.

Generally speaking, these reasons are presented as verbal reports. The reasons might not always be initially presented in declarative sentences, but in context must have their meaning preserved by translation or paraphrase into a statement or proposition.

Statement or Proposition:

A verbal expression in sentence form which is either true or false (but not both) — i.e., a sentence with a truth value.

How to Identify the Presence of an Argument

-

There are three main ways of judging the presence of an argument:

-

The author or writer explicitly states the reasons, evidence, justification, rationale, or proof of a

statement.

Example: [1] I conclude the dinosaurs probably had to cope with cancer. These are my reasons: [2] a beautiful bone found in Colorado filled with agate has a hole in its center, [3] the outer layer was eroded all the way through, and [4] this appearance closely matches metastatic bone tumors in humans.

Usually, however, the emphasized phrases, “I conclude” and “These are my reasons” are omitted in the text for stylistic reasons — leaving the structure of the argument to be inferred from the meanings of the statements used and less obvious transitional phrases which might indicate reasons or conclusions.

-

The author uses argument indicators signifying the presence of an argument.

Example: [1] Since the solution turns litmus paper red, [2] I conclude it is acidic, [3] inasmuch as acidic substances react with litmus to form a red color.

In this argument, “since” is being used as a premise indicator and “conclude” is used as a conclusion indicator, and “inasmuch as” is another premise indicator.

-

Ask yourself “What is the author trying to prove in this passage?” In order to determine whether or not an argument is present in a passage, it sometimes helps to pose this question. If an answer is directly forthcoming, then the passage is most likely an argument.

Despite that, the presence of an argument cannot always be known with certainty; often the purpose of the passage can only be contextually surmised. Establishing the intention of a speaker or writer is sometimes the only determining factor of whether or not an argument is present.

A charitable and, insofar as possible, impartial conventional interpretation of the context, content, and purpose of the passage should be sought.

What if indicators are not present in a passage? The identification of arguments without argument indicators present is achieved by recognizing from the meanings of the sentences themselves when evidence or reasons are being provided in support of a concluding statement.

For example, evaluate this passage: “[1] The types of sentences you use are quite varied. [2] I've noticed that your recent essays are quite sophisticated. [3] You have been learning much more about sentence structure.”

Note that if we ask upon reading this passage, “What is being proved?,” then the answer in statement [3] suggests itself as resulting naturally from the first two sentences.

That is, statements [1] and [2] are direct observational evidence giving reasons for the main point (or that which is intended to be proved): namely, the inference to [3] “You have been learning much more about sentence structure.”

So, in the context of a simple argumentative passage, if statements are given as suppositions, observations, or facts without evidence or logical support, those statements are premises. If a statement is given logical or evidential support from another statement or statements, that statement is a conclusion (or subconclusion if the argument is complex).

How to Analyze Simple Arguments

In order to analyze simple and complex arguments, we will find it useful to construct a diagram of the structure of the argument that details the relations among the various premises and conclusions.

-

The conclusion of one argument can become a premise for another argument. Thus, a statement can be the conclusion of one argument and a premise of a following argument, just as a daughter in one family can become a mother in another family.

For example, consider this “chained argument”: “[1] Because of our preoccupation with the present moment and the latest discovery, [2] we do not read the great books of the past. [2] Because we do not do this sort of reading, and [3] do not think it is important, [4] we do not bother about trying to learn to read difficult books. [5] As a result, we do not learn to read well at all.” [1]

Diagramming the argument illustrates the internal logical structure more clearly than the written description: “Statement [1] provides evidence for [2], and [2] together with [3] gives evidence for [4], and as a result of [4], statement [5] follows with some degree of probability.”
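As a rough illustration, the structure of this chained argument can also be written down as data. The sketch below is a hypothetical Python encoding (the names `INFERENCES` and `classify` are assumptions made for illustration and are not part of the original notes): each entry pairs the statements that jointly give evidence with the statement they support, and statements are then labeled by the role they play.

```python
# Hypothetical encoding of the chained argument's structure.
# Each entry: (statements that jointly give evidence, statement supported).
INFERENCES = [
    ({1}, 2),      # [1] provides evidence for [2]
    ({2, 3}, 4),   # [2] together with [3] gives evidence for [4]
    ({4}, 5),      # as a result of [4], statement [5] follows
]


def classify(inferences):
    """Label each statement as a premise, subconclusion, or final conclusion."""
    supported = {conclusion for _, conclusion in inferences}
    used_as_premise = set().union(*(premises for premises, _ in inferences))
    roles = {}
    for statement in sorted(supported | used_as_premise):
        if statement not in supported:
            roles[statement] = "premise"           # offered without support
        elif statement in used_as_premise:
            roles[statement] = "subconclusion"     # supported, then reused
        else:
            roles[statement] = "final conclusion"  # supported, never reused
    return roles


if __name__ == "__main__":
    for statement, role in classify(INFERENCES).items():
        print(f"[{statement}] {role}")
```

Under this encoding, [1] and [3] come out as premises, [2] and [4] as subconclusions, and [5] as the final conclusion, which matches the written description of the diagram.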

-

The number of arguments in a passage is conventionally established by the number of conclusions in that passage.

-

In analyzing the structure of an argument, whether simple or complex, the all-important first step is to find the conclusion. Here are some specific suggestions as to how to find the conclusion.

-

The conclusion might be evident from the content and context of the paragraph structure. The sequence of sentences is often an indication of the conclusion. Arrangement of sentences from most general to specific is a common form of paragraph or passage; the arrangement of sentences from specific to general is a bit less common.[2] Considering both cases, the conclusion is often the first or the last sentence in a passage. [3]

Example argument: [1] John didn't get much sleep last night. [2] He has dark circles under his eyes. [3] He looks tired.

The conclusion is the first sentence in the passage. Statements [2] and [3] are observational evidence for statement [1], which is inferred from those observations.

-

Nevertheless, the conclusion can occur anywhere in the paragraph, especially if the passage has not been revised for clarity. Usually, if a conclusion is not the first or last sentence of an argumentative paragraph, a conclusion indicator is present, or the last sentence is presented as an after-thought with a premise indicator. Frequently used argument indicators are highlighted below under separate headings.

Example argument: [1] Studies from rats indicate that neuropeptide Y in the brain causes carbohydrate craving, and [2] galanin causes fat craving. [3] Hence, I conclude that food cravings are tied to brain chemicals [4] because neuropeptide Y and galanin are brain chemicals.

-

The structure of the argument can be inferred by attending to the premise and conclusion indicators even though the content of the argument might not be understood.

Working with Premise Indicators

Premise indicators are terms which often indicate and precede the presence of reasons. Frequently used premise indicators include the following terms:

for

since

as

because [* when the term means “for the reason that” but not when it means “from the cause of”]

inasmuch as

follows from

after all

in light of the fact

assuming

seeing that

granted that

in view of

as shown by; as indicated by

given that

inferred from; concluded from; deduced from

due to the fact that

for the reason [* often mistaken for a conclusion indicator]

-

Examples of their use in arguments:

-

“[1] The graphical method for solving a system of equations is an approximation, [2] since reading the point of intersection depends on the accuracy with which the lines are drawn and on the ability to interpret the coordinates of the point.”

The term “since” indicates that the second clause of this passage is a premise; the first clause is left as the conclusion.

In practice, the second clause can be broken down into two separate premises, so that the argument could also have been set up as follows:

[2a] Reading the point of intersection of a graph depends on the accuracy with which the lines are drawn.

[2b] Reading the point of intersection also depends upon the ability to interpret the coordinates of the point.

[1] Thus, the graphical method for solving a system of equations is an approximation.

So under this interpretation, [2a] together with [2b] is evidence for [1].

-

A simpler argument with a premise indicator:

[1] Questionable research practices are far more common than previously believed, [2] after all, the Acadia Institute found that 44 percent of students and 50 percent of faculty from universities were aware of cases of plagiarism, falsifying data, or racial discrimination.

-

Try the following examples for yourself:

-

“[1] It seems hard to prove that the composition of music and words was ever a simultaneous process. [2] Even Wagner sometimes wrote his ‘dramas’ years before they were set to music; [3] and, no doubt, many lyrics were composed to fit ready melodies.” [4]

Although there are no indicators for the first two statements in this passage, the second and third statements serve as an example which supports or gives evidence for the first, more general statement. Therefore, the first statement is the conclusion of the argument.

This is a weak inductive argument: the conclusion is supported by only one example.

-

“[1] Any kind of reading I think better than leaving a blank still a blank, [2] because the mind must receive a degree of enlargement and [3] obtain a little strength by a slight exertion of its thinking powers.”[5]

The premise indicator “because” indicates the first premise. Note that the “and [3]” before the last clause in this passage connects clauses of equal standing; so [3] is tacitly translated to the independent clause:

“[3] [the mind must] obtain a little strength by a slight exertion of its thinking powers.”

The conjunction “and” connects statements of equal status, so the statement following it is also a premise — that leaves the first statement as the conclusion of this argument.

Argument reconstruction of this kind is often done for clearer understanding of the reasoning for purposes of evaluation. (This argument is inductive since the conclusion does not follow with certainty.)

Working with Conclusion Indicators

Conclusion indicators are words which often indicate the statement which logically follows from the reasons given. Common conclusion indicators include the following:

thus

therefore

consequently

hence

so

it follows that

proves that

indicates that

accordingly [* an indicator often missed]

implies that; entails that; follows that

this means

we may infer

suggests that

results in

demonstrates that

for this reason; for that reason [* often mistaken for premise indicators]
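A first pass at spotting indicator words can be automated. The following is a minimal sketch, assuming a small hand-picked subset of the premise and conclusion indicators listed in these notes; the names `PREMISE_INDICATORS`, `CONCLUSION_INDICATORS`, and `find_indicators` are hypothetical. It only reports candidate cues and cannot tell whether a word such as “since” is being used argumentatively, so each hit still has to be judged in context.

```python
import re

# Assumed subsets of the indicator lists in these notes; extend as needed.
PREMISE_INDICATORS = ["since", "because", "inasmuch as", "after all", "given that"]
CONCLUSION_INDICATORS = ["thus", "therefore", "hence", "so", "consequently"]


def find_indicators(passage):
    """Return (position, kind, phrase) for each indicator found, in text order."""
    hits = []
    for kind, phrases in (("premise", PREMISE_INDICATORS),
                          ("conclusion", CONCLUSION_INDICATORS)):
        for phrase in phrases:
            # Word boundaries keep "so" from matching inside "solution".
            pattern = r"\b" + re.escape(phrase) + r"\b"
            for match in re.finditer(pattern, passage, flags=re.IGNORECASE):
                hits.append((match.start(), kind, phrase))
    return sorted(hits)


if __name__ == "__main__":
    litmus = ("Since the solution turns litmus paper red, I conclude it is "
              "acidic, inasmuch as acidic substances react with litmus to "
              "form a red color.")
    for position, kind, phrase in find_indicators(litmus):
        print(position, kind, phrase)
```

On the litmus example this flags “since” and “inasmuch as” as premise cues; “conclude” is not in the assumed lists, so the conclusion would still have to be found by elimination.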

-

Examples of their use in arguments:

-

[1] No one has directly observed a chemical bond, [2] so scientists who try to envision such bonds must rely on experimental clues and their own imaginations.

-

[1] Math grades for teens with bipolar disorder usually drop noticeably about one year before their condition is diagnosed, thus [2] probably bipolar disorder involves a deterioration of mathematical reasoning.

-

[1] Coal seams have been discovered in Antarctica. [2] This means that the climate there was once warmer than it is now.

[3] Thus, either the geographical location of the continent has shifted or the whole Earth was once warmer than it is now.

-

Try the following examples for yourself:

-

“[1] We humans appear to be meaning-seeking creatures who have had the misfortune of being thrown into a world devoid of meaning. [2] One of our major tasks is to invent a meaning sturdy enough to support a life and [3] to perform the tricky maneuver of denying our personal authorship of this meaning. [4] Thus we conclude instead that it [our meaning of life] was ‘out there’ waiting for us.” [6]

The only indicator in the argument is the conclusion indicator “Thus” in statement [4]. The first two sentences are divided here into three statements which provide the reasons for concluding statement [4].

(It would have been acceptable also to interpret the sentence beginning with [2] as one statement. If this whole argument were related to other arguments, the choice of how to divide up the statements would be made by translations which best match the related ideas.)

The central idea of the passage is that since [the author thinks] life has no intrinsic meaning, to live well we must invent a meaning for our lives and then believe this imposed meaning is genuinely real.

-

“[1] The fact is that circulating in the blood of the organism, a carcinogenic compound undergoes chemical changes. [2] This is, for instance, the case in the liver, which is literally crammed with enzymes capable of inducing all sorts of modifications. [3] So it may well be that cancer is induced not by the original substances but by the products of their metabolism once inside the organism.” [7]

The only indicator is the conclusion indicator “So” in statement [3]. The first two statements describe a possible instance of the final generalization [3].

Notice that statement [3] could have been interpreted as two statements: “[3] So it may well be that cancer is induced not by the original substances but [4] [it may well be that cancer is induced] by the products of their metabolism once inside the organism.”

However, in this case, it seems clearer to keep [3] and [4] as one statement since [3] and [4] express one complete thought. Either interpretation is possible; the simpler one is taken here.

Working with Equal Status Indicators

Indicators of Equal Status of Premises or Conclusions: Conjunctives (including some conjunctive adverbs) often indicate equal status of premise or conclusion in connecting clauses or sentences. Noticing these conjuncts is especially helpful in argument analysis. Indicators of clauses of equal status also include certain adverbial clauses, “conditional, concessive, and contrastive terms,” informing of some type of expectation or opposition between clauses:

or - (the inclusive “or”, i.e. “either or or both”)

and

in addition

although

despite; in spite of

besides

though

but

yet

however

moreover

nevertheless

not only … but also - (and also the semicolon “;”)

-

If one of the clauses has already been identified as a premise or a conclusion of an argument, then its coordinating clause is probably the same type of statement. Check the following examples.

-

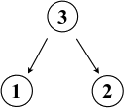

The equal status indicator “and”:

![Argument diagram shows statements [2] and [3] lead to statement [1].](https://philosophy.lander.edu/logic/images/diagram_23-1.gif)

[1] Some students absent today are unprepared for this test, since [2] the law of averages dictates that only 10% of students are absent due to illness, and [3] more than 10% are absent.

Comment: Notice that statements [2] and [3] work together as a reason, so both together provide evidence for [1]. Having separate arrows for [2] and [3] leading to conclusion [1] would represent a misunderstanding of the argument.
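The difference between premises that work together and premises that each support the conclusion separately can be made concrete with a small sketch. The encoding below is an assumption made for illustration (`LINKED`, `SEPARATE`, and `is_supported` are hypothetical names): an inference is a pair of a premise set and a conclusion, and a conclusion counts as supported only when every premise of some inference to it is accepted.

```python
# Reading used in the diagram: [2] and [3] jointly support [1].
LINKED = [({2, 3}, 1)]
# Mistaken reading: [2] and [3] each support [1] on their own.
SEPARATE = [({2}, 1), ({3}, 1)]


def is_supported(conclusion, accepted, inferences):
    """True if some inference to `conclusion` has all of its premises accepted."""
    return any(
        target == conclusion and premises <= accepted
        for premises, target in inferences
    )


print(is_supported(1, {2, 3}, LINKED))  # True: both premises are available
print(is_supported(1, {2}, LINKED))     # False: [2] alone gives no reason for [1]
print(is_supported(1, {2}, SEPARATE))   # True: the mistaken reading lets [2] stand alone
```

The last line shows what goes wrong with separate arrows: the claim that only 10% of students are absent due to illness says nothing about unprepared students unless it is combined with the claim that more than 10% are absent.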

-

The equal status indicator “not only … but also”:

![Argument diagram shows premise [1] leads to conclusions [2] and [3].](https://philosophy.lander.edu/logic/images/diagram_1-23.gif)

[1] Lenses function by refracting light at their surfaces. [2] Consequently, not only does their action depend on the shape of the lens surfaces but also [3] it depends on the indices of refraction of the lens material and the surrounding medium.

Comment: Notice that sentence [3] could have been divided into two statements as follows: “ … [3] it depends on the indices of refraction of the lens material and [4] [it depends on] the surrounding medium.”

(Again, how sentences should be divided into different statements depends, to a large degree, on how the argument under analysis is related to any other contextual statements and arguments.)

-

Try the following examples for yourself:

-

“[1] Mystery is delightful, but [2] unscientific, [3] since it depends upon ignorance.” [8]

We could simply consider [1] and [2] as one statement, but the argument seems clearer if we consider [2] as elliptically expressing the statement “[2] [Mystery is] unscientific.” The premise indicator “since” identifies the only premise.

-

“[1] Many of those children whose conduct has been most narrowly watched, become the weakest men, [2] because their instructors only instil certain notions into their minds, that have no other foundation than their authority; [3] and if they be loved or respected, the mind is cramped in its exertions and wavering in its advances.” [9]

The premise indicator “because” indicates the first premise, which is connected by the equal status connector “and” to the second premise. By elimination, the first independent clause is the conclusion.

-

“[1] For there is altogether one fitness (or harmony). [2] And as the universe is made up out of all bodies to be such a body as it is, [3] so out of all existing causes necessity (destiny) is made up to be such a cause as it is.” [10]

The argument is clearly marked with indicators:

For {1} and {2}, so {3}.

The premise indicator “for” connects another clause of equal standing, with the conclusion marked by the conclusion indicator “so.”

How to Analyze Complex Arguments

When analyzing complex arguments, it can be helpful to reconstruct the argument by identifying the main conclusion first, and then by working backwards, locate the premises by any premise indicators present.

-

Consider the following argument:

[1] If students were environmentally aware, they would object to the endangering of any species of animal. [2] The well-known Greenwood white squirrel has become endangered [3] as it has disappeared from the Lander campus [4] because the building of the library destroyed its native habitat. [5] No Lander students objected. [6] Thus, Lander students are not environmentally aware.

Note that the following indicators are given in this passage:

as

because

thus

The argument is complex:

-

Statement [6] is the final conclusion since it has the conclusion indicator “thus” and the import of the paragraph indicates that this statement is the main point of the argument. (It is also the last sentence in the paragraph.)

-

The premise indicators suggest that [2] is a subconclusion supported by [3] since the indicator “as” connects them, and [3], in turn, is a subconclusion supported by [4] since the indicator “because” connects those two statements.

-

The only statements not yet examined are [1] and [5].

After a bit of thought, the structure of the first statement [1] considered together with statement [5] should suggest itself as a common argument form:

[1] If students were environmentally Aware, [then] they would Object to the endangering of any species of animal.

[5] No student Objected [to the endangering of the Greenwood white squirrel].

which can be abbreviated as follows:

[1] If A then O

[5] Not O

The negation of the consequent clause O by the second premise leads us to expect the conclusion “Not A”.

Aha! “Not A” is the same thing as the conclusion [6] we identified in step 1:

“[6] Thus, Lander students are not environmentally Aware,”

(Later in the course we will see that this often used argument structure is termed modus tollens.)

So the diagram of this internal sub-argument then is as follows:

[1] If A then O

[5] Not O

[6] Not A

(Note that “Not A” is the same statement as [6].)
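One way to see why this form can be trusted is to check every combination of truth values for A and O. The sketch below is illustrative only: it assumes that “if … then” is read as the material conditional, and the helper names `implies` and `is_valid` are hypothetical. An argument form counts as valid here when no assignment makes all the premises true and the conclusion false.

```python
from itertools import product


def implies(p, q):
    """Material conditional: 'if p then q' is false only when p is true and q is false."""
    return (not p) or q


def is_valid(premises, conclusion, n_vars):
    """Valid if no truth assignment makes every premise true and the conclusion false."""
    for values in product([True, False], repeat=n_vars):
        if all(p(*values) for p in premises) and not conclusion(*values):
            return False
    return True


# The form above: [1] If A then O; [5] Not O; therefore [6] Not A.
modus_tollens = is_valid(
    premises=[lambda a, o: implies(a, o), lambda a, o: not o],
    conclusion=lambda a, o: not a,
    n_vars=2,
)

# For contrast, a look-alike form: If A then O; O; therefore A.
look_alike = is_valid(
    premises=[lambda a, o: implies(a, o), lambda a, o: o],
    conclusion=lambda a, o: a,
    n_vars=2,
)

print(modus_tollens)  # True
print(look_alike)     # False
```

The contrast case fails because A false and O true makes both of its premises true while its conclusion is false; no such assignment exists for the form used in the Lander argument.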

-

Hence the whole argument can now be pieced together as the following complex argument:

-

Caution: In some contexts, the use of indicator words such as those listed above does not indicate the presence of an argument. For instance, “because” and “so” are used as indicator words in explanations; “since” and “as” are also used in contexts other than argumentative ones.

Consider this passage: “The explanation as to why productivity has slumped since 2004 is a simple one. That year coincided with the creation of Facebook”[11]

The passage here is probably intended to amuse rather than explain why productivity has slumped. The explanation has some cogency as an argument as well. In cases like this, the import of the passage can only be determined from its context. (Notice that “since” in the above passage is used as a preposition, not as a conjunctive adverb used as a premise indicator.)

So, the presence of argument indicators is not a sure sign an argument is present. Here are two examples of passages where it's doubtful that the writer intended to offer an argument:

-

Literary Implication: For example, in the following book review, two prose images drawn from the work of the poet Stevie Smith illustrate a literary insight.

In the passage excerpted below, the emphasized phrase “The implication … is” does not function as an argumentative conclusion indicator. Instead, the prose images are intended to suggest a meaning beyond the literal interpretation of the events pictured:

“In The Voyage of the Dawn Treader, the ship's prow is ‘gilded and shaped like the head of a dragon with wide open mouth’ so when, a moment later, the children stare at the picture ‘with open mouths’, they are being remade in its image … The painted ocean to which Joan is drawn is ‘like a mighty animal’, a ‘wicked virile thing’. The implication in both cases is that art is not safe, and that this is why it's needed.” [emphasis mine] [12]

From a logical point of view, literary implication is a type of imaginative generalization meant to enlighten rather than provide evidence or demonstrate.

-

So it's important to realize that the presence of terms in the argument indicator lists is not a sure sign the passage is an argument; these words are also used in other contexts. The use of these terms is determined within the contexts in which they appear.

By way of example, consider this passage from the Hindu texts of the Upanishads:

“He asked: ‘Who are the Âdityas?’

Yâgñavalkya replied: ‘The twelve months of the year, and they are Âdityas, because they move along (yanti) taking up everything [i.e., the lives of persons, and the fruits of their work] (âdadânâh). Because they move along, taking up everything, therefore they are called Âdityas.’”[13]

Evaluated superficially, this passage could be analyzed as a circular argument, but in context, the purpose of the passage is merely to define and explain the meaning of the word “Âdityas.”

Circular Argument:

Also termed “begging the question” or “petitio principii,” the argumentative fallacy of assuming in a premise the same statement which was to be proved. Formally the argument is valid, but is considered by some logicians fallacious when deceptive.

Links to Diagramming Online Quizzes with Suggested Solutions

Test your understanding with any of the sections for diagramming on the following quizzes, tests, or exercises:

Quiz: Diagramming Simple Arguments

Problem Set 1 PDF

Problem Set 2 PDF

Problem Set 3 PDF

Test: Structure of Arguments Part I

Peter Mott, review of The Logic of Real Arguments, by Alec Fisher, The Philosophical Quarterly 39 no. 156 (July, 1989), 370-373.

Notes: Diagramming Arguments

1. Mortimer J. Adler, How to Read a Book (New York: Simon and Schuster: 1940), 89. ↩

2. Some English textbooks describe argumentative paragraph structure as deductive (proceeding from general to specific statements) or inductive (proceeding from specific to general statements). For example, educator and rhetorician Fred Newton Scott writes:

“There are two orders of progress in thought, one proceeding from the statement of a general principle to particular applications of the principle (deductive reasoning), the other proceeding from the statement of particular facts to a general conclusion from those facts (inductive reasoning). In deductive reasoning, the general principle (stated usually at the beginning) is applied in the particulars; in inductive reasoning the general principle (stated usually at the end) is inferred from the particulars, as a conclusion. In a deductive paragraph, as would be expected, the sentences applying the principle to the particular case in hand, usually follow the topic-statement, which announces the principle. In an inductive paragraph the sentences stating the particular facts usually precede the topic-statement, which gives the general conclusion.” [emphases deleted]

Fred Newton Scott, Paragraph-Writing (Boston: Allyn and Bacon, 1909), 62-63.

Since this distinction between induction and deduction proves faulty for many arguments, deductive arguments are now described as those that provide total support for their conclusion (i.e., they logically entail the conclusion), whereas inductive arguments give partial support for their conclusion (i.e., they provide only some evidence for the conclusion).↩

3. Most paragraphs have a three-part structure: introduction (often a topic sentence), body (often supporting sentences), and conclusion (often a summary statement). In argumentative writing, the conclusion of an argument is often the topic sentence or main idea of a paragraph. Consequently, the first sentence or last sentence of many argumentative paragraphs contains the conclusion.↩

4. René Wellek and Austin Warren, Theory of Literature (New York: Harcourt, Brace: 1956), 127.↩

5. Mary Wollstonecraft, Vindication of the Rights of Woman (1792 London: T. Fisher Unwin, 1891), 273.↩

6. Irvin D. Yalom, The Gift of Therapy (New York: Harper Perennial, 2009), 133.↩

7. Maxim D. Frank-Kamenetskii, Unraveling DNA trans. Lev Liapin (New York: VCH Publishers, 1993), 175.↩

8. Bertrand Russell, The Analysis of Mind (London: 1921 George Allen & Unwin, 1961), 40. ↩

9. Wollstonecraft, Vindication, 175.↩

10. Marcus Aurelius, Meditations, trans George Long (New York: Sterling: 2006), 69.↩

11. Nikko Schaff, “Letters: Let the Inventors Speak,” The Economist 460 no. 8820 (January 26, 2013), 16.↩

12. Matthew Bevis, “What Most I Love I Bite,” in the “Review of The Collected Poems and Drawings of Stevie Smith,” London Review of Books 38 No. 15 (28 July 2016), 19.↩

13. Brihadâranyaka-Upanishad in The Upanishads, Pt. II, trans. F. Max Müller in The Sacred Books of the East, Vol. XV, ed. F. Max Müller (Oxford: Clarendon Press, 1900), 141.↩

Readings: Diagramming Arguments

Carnegie Mellon University, iLogos: Argument Diagram Software and User Guide Free software cross-platform. Also, a list with links to other argument diagramming tools.

Martin Davies, Ashley Barnett, and Tim van Gelder, “Using Computer-Aided Argument Mapping to Teach Reasoning,” in Studies in Critical Thinking, ed. J. Anthony Blair (Windsor, ON: Open Monograph Press, 2019), 131-176. Chapter outlining how to use argument mapping software in logic classes. doi: 10.22329/wsia.08.2019

Jean Goodwin, “Wigmore's Chart Method,” Informal Logic 20 no. 3 (January, 2000), 223-243. doi: 10.22329/il.v20i3.2278 Tree diagram method for complex argument representation and inference strength assessment for legal analysis.

Mara Harrell, Creating Argument Diagrams, Carnegie Mellon University. Tutorial on identification of indicators, rewriting statements, providing missing premises, and reconstruction of arguments. (28 pp.)

Dale Jacquette, “Enhancing the Diagramming Method in Logic,” Argument: Biannual Philosophical Journal 1 no. 2 (February, 2011), 327-360. Also here. An extension of the Beardsley diagramming method for disjunctive and conditional inferences as well as other logical structures.

Michael Malone, “On Discounts and Argument Identification,” Teaching Philosophy 33 no. 1 (March, 2010), 1-15. doi: 10.5840/teachphil20103311 Discount indicators such as “but”, “however”, and “although” are distinguished from argument indicators, but help in argument identification.

Jacques Moeschler, “Argumentation and Connectives,” in Interdisciplinary Studies in Pragmatics, Culture and Society, eds. Alessandro Capone and Jacob L. Mey (Cham: Springer, 2016), 653-676.

John Lawrence and Chris Reed, “Argument Mining: A Survey,” Computational Linguistics 45 no. 4 (September, 2019), 765-818. doi: 10.1162/coli_a_00364 Review of recent advances and future challenges for extraction of reasoning in natural language.

Frans H. van Eemeren, Peter Houtlosser, and Francisca Snoeck Henkemans, Argumentative Indicators in Discourse (Dordrecht: Springer, 2007). Sophisticated study of indicators for arguments, dialectical exchanges, and critical discussion. doi: 10.1007/978-1-4020-6244-5

Wikipedia contributors, “Argument Map,” Wikipedia. History, applications, standards, and references for argument maps used in informal logic.

(Free) Online Tutorials with Diagramming

Carnegie Mellon University, Argument Diagramming v1.5 (Open + Free). Free online course on argument diagramming using built-in iLogos argument mapping software by Carnegie Mellon's Open Learning Initiative. (With or without registration and two weeks to completion).

Harvard University, Thinker/Analytix: How We Argue. Free online course on critical thinking with argument mapping with Mindmup free diagramming software, videos, and practice exercises. (Requires registration and 3-5 hrs. to complete).

Joe Lau, “Argument Mapping,” Module A10 on the Critical Thinking Web at the University of Hong Kong. (No registration and an hour to complete).

Introduction to Logic: Copi, Irving M., Cohen, Carl, McMahon, Kenneth

Baronett - Logic 4e Student Resources

Chapter 5: Categorical Propositions

A. Categorical Propositions

Categorical propositions are statements about classes of things. A class is a group of objects. There are two class terms in each categorical proposition, a subject class and a predicate class.

S = Subject

- The Grammatical Subject: To linguists, this is a purely syntactic position, largely independent of semantics. In English, the subject is identifiable by a number of syntactic and morphological features. Most notably, it’s a noun-phrase in a pre-verbal position. Typically, the subject and verb agree in number. A number of other tests can pinpoint the grammatical subject of a sentence, but the two above are most reliable.

- The Logical Subject: the term “subject” isn’t really used in logic or set theory. (I’ve seen it in literary theory, but that’s a separate usage.) Semanticists and logicians tend to speak instead about individuals or entities.

P = Predicate

- The Grammatical Predicate: amongst linguists, this term has long disappeared from usage. It still lingers in English textbooks, where its definition tends to be muddled. Some textbooks define it in negative terms—it’s every part of the sentence other than the grammatical subject. In practice, such a definition approximates what linguists might call a “verb phrase.”

- The Logical Predicate: this term defies easy definition, but it’s used in set theory and predicate calculus (a logical language). A predicate is a semantic relation that applies to one or more arguments. A one-place predicate would be “(be) green.” A two-place predicate takes two arguments. For example, the two-place predicate “hit” involves both a hitter and the entity being hit. Nouns, verbs, and adjectives all correspond to semantic predicates.

A = All S are P.

E = No S are P.

I = Some S are P.

O = Some S are not P.

The study of categorical propositions includes the logical structure of individual categorical propositions (how the subject and predicate classes relate to each other), as well as how correct reasoning proceeds from one categorical proposition to another.

There are four types of categorical proposition:

- A-proposition: Asserts that the entire subject class is included in the predicate class.
  - Standard-form of the A-proposition: All S are P.
  - This is the universal affirmative proposition.

- I-proposition: Asserts that at least one member of the subject class is included in the predicate class.
  - Standard-form of the I-proposition: Some S are P.
  - This is the particular affirmative proposition.

- E-proposition: Asserts that the entire subject class is excluded from the predicate class.
  - Standard-form of the E-proposition: No S are P.
  - This is the universal negative proposition.

- O-proposition: Asserts that at least one member of the subject class is excluded from the predicate class.
  - Standard-form of the O-proposition: Some S are not P.
  - This is the particular negative proposition.

B. Quantity, Quality, and Distribution

A categorical proposition is made up not only of a subject class and a predicate class, but also a quantifier (universal or particular) and a copula (a conjugation of the verb “to be,” which usually appears as “are” or “are not”). The quantifier tells us how much of the subject class is included in or excluded from the predicate class, while the copula joins the subject-predicate elements together.

We have seen that the categorical proposition can be viewed in terms of the quantity of the subject class’s relation to the predicate class, but we also consider the quality of that relation, that is, whether or not the relation is affirmed (“All S are P,” and “Some S are P”) or negated (“No S are P,” and “Some S are not P”).

To understand the relation between the subject class and the predicate class, we need to know not only the quantity and quality of the proposition but also whether a class is distributed. In the list below, the suffix d marks a distributed term and the suffix u marks an undistributed term. We say a class is distributed when the entirety of the class is included in or excluded from the other class:

- All Sd are Pu. The subject term is distributed in a universal affirmative proposition because the entirety of the subject class is included in the predicate class. Since the scope of the universal affirmative proposition covers only the subject class, there is no distribution of the predicate term.

- Some Su are Pu. Neither the subject nor predicate term is distributed. This is because the scope of the quantifier does not cover the entirety of either class.

- No Sd are Pd. Both the subject and predicate terms are distributed. This is because, by definition, when the entirety of the subject class is excluded from the predicate class, the converse is also the case.

- Some Su are not Pd. Only the predicate term is distributed. At least one member of the subject class is excluded from the entire predicate class. This means that the entirety of the predicate class excludes from itself at least one member of the subject class.
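The four cases above reduce to two tests: a universal proposition distributes its subject, and a negative proposition distributes its predicate. The following is a minimal sketch of that shortcut; the dictionary and function names are illustrative assumptions, not part of the textbook.

```python
# Quantity and quality of the four standard-form proposition types.
QUANTITY = {"A": "universal", "E": "universal", "I": "particular", "O": "particular"}
QUALITY = {"A": "affirmative", "E": "negative", "I": "affirmative", "O": "negative"}


def distributes_subject(kind):
    """A universal proposition (A or E) distributes its subject term."""
    return QUANTITY[kind] == "universal"


def distributes_predicate(kind):
    """A negative proposition (E or O) distributes its predicate term."""
    return QUALITY[kind] == "negative"


def distribution(kind):
    """Return 'd' or 'u' for the subject and the predicate, in that order."""
    subject = "d" if distributes_subject(kind) else "u"
    predicate = "d" if distributes_predicate(kind) else "u"
    return subject, predicate


for kind in "AIEO":
    print(kind, distribution(kind))
```

The printed pairs can be compared line by line with the Sd/Su and Pd/Pu markings in the list.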

C. The Square of Opposition

Two categorical propositions are in opposition when they share subject and predicate classes but have different quantities or qualities (or both). The square of opposition shows us the logical inferences (immediate inferences) we can make from one proposition type (A, I, E, and O) to another.

- If A is true, then E is false, I is true, O is false;

- If E is true, then A is false, I is false, O is true;

- If I is true, then E is false, A and O are indeterminate;

- If O is true, then A is false, E and I are indeterminate;

- If A is false, then O is true, E and I are indeterminate;

- If E is false, then I is true, A and O are indeterminate;

- If I is false, then A is false, E is true, O is true;

- If O is false, then A is true, E is false, I is true.
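One way to check the eight lines above is to list every combination of truth values that the traditional square allows and see what they agree on. The sketch below does this by brute force; the function names are assumptions made for illustration, and `None` stands for "indeterminate."

```python
from itertools import product


def consistent(a, e, i, o):
    """Truth-value combinations allowed by the traditional square."""
    return (
        a != o                # A and O are contradictories
        and e != i            # E and I are contradictories
        and not (a and e)     # contraries cannot both be true
        and (i or o)          # subcontraries cannot both be false
        and (not a or i)      # subalternation: A true forces I true
        and (not e or o)      # subalternation: E true forces O true
    )


MODELS = [
    dict(zip("AEIO", values))
    for values in product([True, False], repeat=4)
    if consistent(*values)
]


def infer(known, value):
    """Given one proposition's truth value, report what follows for the others."""
    remaining = [m for m in MODELS if m[known] == value]
    result = {}
    for kind in "AEIO":
        if kind == known:
            continue
        values = {m[kind] for m in remaining}
        result[kind] = values.pop() if len(values) == 1 else None
    return result


print(infer("A", True))   # E false, I true, O false
print(infer("I", True))   # E false, A and O indeterminate
```

Only three combinations survive the constraints, which is why knowing that a universal is false, or that a particular is true, leaves two of the other propositions indeterminate.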

Two propositions are said to be contradictories when both cannot be true at the same time and both cannot be false at the same time. A and O propositions are contradictories, and E and I propositions are contradictories:

Take the proposition, “All beagles are dogs.” If true, then it’s impossible for the proposition, “Some beagles are not dogs,” to be true, and vice versa. Now consider the proposition, “No dogs are beagles.” If false, then it must be the case that the proposition, “Some dogs are beagles,” is true, and vice versa.

Universal propositions are said to be contraries because they cannot both be simultaneously true. That means that if the A-proposition is true, the E-proposition must be false. If the E-proposition is true, then the A-proposition is false. It is important to remember that we cannot infer anything about a universal proposition when we know that its contrary is false. This is because it is possible for both universal propositions to be simultaneously false: “All animals are dogs,” and “No animals are dogs.”

Now consider the two particular claims. These are subcontraries. This means that they cannot both be simultaneously false: “Some novels are not books,” and “Some novels are books.” But they can both be simultaneously true: “Some books are not novels,” and “Some books are novels.” Therefore, an inference to a subcontrary is valid when and only when we know the premise to be false.

Lastly, universal-to-particular and particular-to-universal inferences are called subalternation. This sort of inference is made only between propositions of the same quality, i.e., A to I (and vice versa), and E to O (and vice versa). These inferences are valid as follows: If the superaltern (A or E) is true, then the subaltern (I or O) is true. If the subaltern is false, then the superaltern is false. Notice that no inference can be made about the subaltern if the superaltern is false:

- “No dogs are animals,” and “Some dogs are not animals.”

- “No killers are murderers,” and “Some killers are not murderers.”

- “All killers are murderers,” and “Some killers are murderers.”

- “All dogs are cats,” and “Some dogs are cats.”

Similarly, no inference can be made if the subaltern is true:

- “Some dogs are not beagles,” and “No dogs are beagles.”

- “Some dogs are not cats,” and “No dogs are cats.”

- “Some animals are dogs,” and “All animals are dogs.”

- “Some dogs are animals,” and “All dogs are animals.”

D. Conversion, Obversion, and Contraposition

Conversion, obversion, and contraposition are further immediate inferences we can make between one categorical proposition and another. Here, the inference involves the placement of the subject and predicate classes, not just an inference from one proposition type to another.

Conversion is valid for E- and I-propositions. Conversion by limitation is valid for A-propositions. Conversion is never valid for O-propositions.

Conversion step for E- and I-propositions:

- Change the places of the subject and predicate classes: “S are P” becomes “P are S.”

Conversion steps for A-propositions:

- Change the universal affirmative quantifier to a particular affirmative quantifier: “All,” becomes “Some.”

- Change the places of the subject and predicate classes: “S are P” becomes “P are S.”

Obversion is valid for all four categorical proposition types.

Obversion steps:

- Change the quality of the proposition (affirmative to negative, or negative to affirmative).

- Replace the predicate term with its complement (“non-”). The complement is the set of objects that do not belong to a given class.

Contraposition is valid for A- and O-propositions. Contraposition by limitation is valid for E-propositions. Contraposition is never valid for I-propositions.

Contraposition steps for A- and O-propositions:

- Change the places of the subject and predicate classes: “S are P” becomes “P are S.”

- Replace the subject and predicate classes with their term complements.

Contraposition steps for E-propositions:

- Change the E-proposition to its corresponding O-proposition.

- Change the places of the subject and predicate classes: “S are P” becomes “P are S.”

- Replace the subject and predicate classes with their term complements.
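The step lists above are mechanical enough to write down as small functions. This is only a sketch under the traditional interpretation described in this section; the `Prop` tuple, the `complement` helper, and the function names are hypothetical and chosen for illustration.

```python
from typing import NamedTuple


class Prop(NamedTuple):
    kind: str        # "A", "E", "I", or "O"
    subject: str
    predicate: str


def complement(term):
    """Toggle the 'non-' prefix, so that non-non-S collapses back to S."""
    return term[4:] if term.startswith("non-") else "non-" + term


def convert(p):
    """Switch subject and predicate; A-propositions convert by limitation."""
    if p.kind in ("E", "I"):
        return Prop(p.kind, p.predicate, p.subject)
    if p.kind == "A":
        return Prop("I", p.predicate, p.subject)
    raise ValueError("conversion is never valid for O-propositions")


OBVERSE_KIND = {"A": "E", "E": "A", "I": "O", "O": "I"}


def obvert(p):
    """Change the quality and replace the predicate with its complement."""
    return Prop(OBVERSE_KIND[p.kind], p.subject, complement(p.predicate))


def contrapose(p):
    """Switch the terms and complement both; E contraposes by limitation."""
    if p.kind in ("A", "O"):
        return Prop(p.kind, complement(p.predicate), complement(p.subject))
    if p.kind == "E":
        return Prop("O", complement(p.predicate), complement(p.subject))
    raise ValueError("contraposition is never valid for I-propositions")


print(convert(Prop("A", "beagles", "dogs")))
print(obvert(Prop("E", "dogs", "cats")))
print(contrapose(Prop("A", "beagles", "dogs")))
```

Raising an error for the invalid cases (conversion of O, contraposition of I) keeps the functions from silently producing an inference the section says is never valid.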

E. Existential Import

There are two ways to think about the subject class of a universal claim, be it universal affirmative or universal negative. One involves what is called existential import. When we assume that the subject class does in fact contain at least one member, we say that claim has existential import. Consider the following two classes of things: dogs and leprechauns. We say that the dog class does in fact contain at least one member, while we do not say the same thing about leprechauns. This means that we do not presuppose there is an object called “leprechaun,” but we do presuppose there is an object called “dog.”

The traditional square of opposition, which represents contradictories, contraries, subcontraries, and subalternation, assumes existential import. That assumption poses certain problems for inferences involving propositions with subject classes we know are empty, e.g., leprechauns. For example, if the subject class of a universal affirmative proposition is empty (“All leprechauns are gold-hoarders”), the proposition is false. We would think its contradictory (“Some leprechauns are not gold-hoarders”) must therefore be true. But since the subject class is empty, this particular negative proposition is also false.

F. The Modern Square of Opposition

The Modern Square of Opposition is a revised interpretation of the universal proposition’s subject class. This interpretation does not assume existential import. The Venn diagram pictures this status by way of circles and shaded areas:

All S are P:

We interpret shading as designating emptiness. Since the area of S outside of P is shaded, it is empty. Hence, whatever S-objects there might be are in the P class. Notice we are not saying there is even one S-object, just that if there are any, they’re all in the P class. Since shading designates emptiness, we can see there is nowhere in the S class outside of P for an S-object to exist. This is a visual representation of a lack of existential import. The same principle applies to the universal negative proposition:

No S are P:

The Venn diagram can also be made for the two particular claims, with an x representing existence:

Some S are P:

Some S are not P:

Notice that the I-proposition contradicts the E-proposition, and vice-versa. The O-proposition contradicts the A-proposition, and vice-versa. Contradictories are the only valid inferences possible in the Modern Square of Opposition.
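The modern reading can be mimicked with ordinary sets: shading corresponds to an empty region, and an x corresponds to a non-empty one. The sketch below is an illustration only; the function names and the sample sets are assumptions.

```python
def a_prop(s, p):
    """All S are P: the part of S outside P is empty (shaded)."""
    return len(s - p) == 0


def e_prop(s, p):
    """No S are P: the overlap of S and P is empty (shaded)."""
    return len(s & p) == 0


def i_prop(s, p):
    """Some S are P: the overlap contains at least one object (an x)."""
    return len(s & p) > 0


def o_prop(s, p):
    """Some S are not P: the part of S outside P contains an x."""
    return len(s - p) > 0


leprechauns = set()                 # an empty subject class
gold_hoarders = {"dragon"}          # hypothetical member, for illustration

# With an empty subject class both universals hold and both particulars fail,
# so contrariety, subcontrariety, and subalternation all break down.
print(a_prop(leprechauns, gold_hoarders), e_prop(leprechauns, gold_hoarders))
print(i_prop(leprechauns, gold_hoarders), o_prop(leprechauns, gold_hoarders))

# The contradictory pairs still always take opposite truth values.
print(a_prop(leprechauns, gold_hoarders) != o_prop(leprechauns, gold_hoarders))
print(e_prop(leprechauns, gold_hoarders) != i_prop(leprechauns, gold_hoarders))
```

Because A is defined as the exact denial of O, and E as the exact denial of I, the contradictory relations hold for any pair of sets, empty or not.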

G. Conversion, Obversion, and Contraposition Revisited

Under the modern interpretation, conversion is valid only for E- and I-propositions:

- “No S are P” is logically equivalent to “No P are S.”

- “Some S are P” is logically equivalent to “Some P are S.”

Under the modern interpretation, obversion is valid for all four proposition types:

- “All S are P” is logically equivalent to “No S are non-P.”

- “No S are P” is logically equivalent to “All S are non-P.”

- “Some S are P” is logically equivalent to “Some S are not non-P.”

- “Some S are not P” is logically equivalent to “Some S are non-P.”

Under the modern interpretation, contraposition is valid only for A- and O-propositions:

- “All S are P” is logically equivalent to “All non-P are non-S.”

- “Some S are not P” is logically equivalent to “Some non-P are not non-S.”

H. Venn Diagrams and the Traditional Square

Because the traditional square of opposition assumes existential import, the Venn diagram for a universal claim will reflect the existence of at least one member of the subject class:

We can see from this type of diagram that the inferences valid for the traditional square of opposition are those with diagrams that mirror each other:

I. Translating Ordinary Language into Categorical Propositions

Ordinary language rarely presents categorical propositions in standard-form. We must take pains, then, to interpret a given ordinary language proposition so that we accurately translate it into standard-form. The following are ways to organize our thinking about how to construct a standard-form categorical proposition (quantity, quality, subject class, copula, predicate class):

- Missing Plural Nouns
  - Since every categorical proposition involves two classes of objects, it is important to identify two nouns.

- Nonstandard Verbs
  - Since every categorical proposition involves a copula, it is important to identify and replace the connecting verb with “are.”

- Singular Propositions
  - Propositions about individuals are always translated as universal propositions.

- Adverbs and Pronouns
  - Since every categorical proposition involves a quantifier, it is important to identify and replace adverbs that describe places or times as reflecting quantity.
  - Since every categorical proposition involves two classes of objects, it is important to identify and replace unspecified nouns.

- “It Is False That…”
  - Since there are two ways a categorical proposition can be negative (“No S are P,” and “Some S are not P”), it is important to identify and rewrite phrases expressing negation.

- Implied Quantifiers
  - Since every categorical proposition involves a quantifier (universal or particular), it is important to make quantifiers explicit.

- Nonstandard Quantifiers
  - Since every categorical proposition involves a quantifier (universal or particular), it is important to rewrite the quantifier in standard-form.

- Conditional Statements
  - “If…then” statements should be rewritten as universal categorical propositions, with the phrase after “if” appearing on the left side of the copula, and the phrase after “then” (expressly stated or implied) appearing on the right side of the copula.

- Exclusive Propositions
  - All exclusive propositions should be rewritten so that the exclusive category appears in the predicate position.

- “The Only”
  - All expressions of “the only” should be rewritten so that the class appearing after “the only” is expressed as the subject class of the categorical proposition.

- Propositions Requiring Two Translations
  - Since every categorical proposition contains two and only two classes of things, whenever an ordinary language proposition contains more than two classes of object, there will be more than one categorical proposition.

Chapter 6: Categorical Syllogisms

A. Standard-Form Categorical Syllogisms

A categorical syllogism is an argument containing three categorical propositions: two premises and one conclusion. The most methodical way to study categorical syllogisms is to learn how to put them in standard-form, which looks like:

Major premise

Minor premise

Conclusion

As you can see, in order to put an argument in standard-form, you need to know the major premise and minor premise. The major premise contains the major term. The major term is the predicate of the conclusion. The minor premise contains the minor term. The minor term is the subject of the conclusion:

Major premise (contains the major term)

Minor premise (contains the minor term)

Minor term, copula, major term

Notice that the major and minor terms have nothing to do with quantity or quality. Moreover, in the major premise, the major term may appear in the subject position, or it may appear in the predicate position. The same is true for the minor term.

The premises also contain the middle term. This term does not appear in the conclusion, but it appears once in each premise:

Major premise (contains the major term and the middle term)

Minor premise (contains the minor term and the middle term)

Minor term, copula, major term

B. Diagramming in the Modern Interpretation

Whereas individual categorical propositions contain two classes of things, a categorical syllogism contains three classes. That means that we use three circles to create a Venn diagram for a categorical syllogism:

When you diagram a categorical syllogism, the goal is to see whether the premises support the conclusion to yield a valid argument. To test a categorical syllogism by way of a Venn diagram involves diagramming only the premises. Once you diagram the premises, you look to see if the conclusion appears. If not, the argument is invalid.

Steps for diagramming the premises of a categorical syllogism in the modern interpretation:

- If one of the premises is a universal proposition, diagram it first. (If both premises are universal, it does not matter which one you diagram first.) This is because you want to eliminate any place where an x, which represents a particular proposition, cannot go.

- Diagram the premise without regard to the third circle, since this is not relevant to the premise at issue.

- Place an x only in an area where it is possible for there to be an object.

- If it is not clear where an x is to be placed, it should straddle the line connecting two circles:

- Never place an x on a portion of a line that does not relate two and only two circles.
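The Venn test can be imitated without drawing anything: treat each of the eight regions formed by the S, M, and P circles as either inhabited or empty, keep only the arrangements in which both premises hold, and check whether the conclusion holds in every one of them. The sketch below follows the modern interpretation (no existential import); the tuple encoding of propositions and the function names are assumptions made for illustration.

```python
from itertools import product

TERMS = "SMP"
# Each region is a triple (inside S?, inside M?, inside P?).
REGIONS = list(product([False, True], repeat=3))


def holds(prop, inhabited):
    """Check one proposition, e.g. ("A", "M", "P"), against the inhabited regions."""
    kind, x, y = prop
    ix, iy = TERMS.index(x), TERMS.index(y)
    overlap = any(r[ix] and r[iy] for r in inhabited)        # an x inside both
    outside = any(r[ix] and not r[iy] for r in inhabited)    # an x in X outside Y
    return {"A": not outside, "E": not overlap, "I": overlap, "O": outside}[kind]


def all_diagrams():
    """Every way of marking the eight regions as inhabited or empty."""
    for flags in product([False, True], repeat=len(REGIONS)):
        yield [r for r, flag in zip(REGIONS, flags) if flag]


def is_valid(major, minor, conclusion):
    """Valid if the conclusion holds wherever both premises hold."""
    return all(
        holds(conclusion, d)
        for d in all_diagrams()
        if holds(major, d) and holds(minor, d)
    )


# All M are P; all S are M; therefore all S are P.
print(is_valid(("A", "M", "P"), ("A", "S", "M"), ("A", "S", "P")))
# Same premises, particular conclusion: fails when S is empty.
print(is_valid(("A", "M", "P"), ("A", "S", "M"), ("I", "S", "P")))
```

This is a brute-force stand-in for reading the conclusion off a finished diagram, not a replacement for learning where shading and x's go.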

C. Diagramming in the Traditional Interpretation

The only difference between diagramming a categorical syllogism in the traditional interpretation and diagramming a categorical syllogism in the modern interpretation is that since the former assumes existential import, any diagram of a universal proposition will include an x:

We can tell from the shading and encircled x’s that we have a universal affirmative (“All M are P”) and a universal negative (“No M are S,” or perhaps “No S are M”).

D. Mood and Figure

We know that each categorical syllogism contains a major premise, a minor premise, and a conclusion. We also know that there is a middle term in each of the premises. When the argument is in standard-form, this middle term can appear in four possible ways, reflecting the figure of the syllogism:

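| Figure 1 | Figure 2 | Figure 3 | Figure 4 |
|---|---|---|---|
| M P | P M | M P | P M |
| S M | S M | M S | M S |
| S P | S P | S P | S P |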

We also know that each proposition in a standard-form categorical syllogism is one of the four proposition types (A, E, I, or O). The premises and conclusion can all be A-propositions, for example. The mood of a syllogism is the list of the proposition types of its major premise, minor premise, and conclusion, in that order (e.g., AAA). Since there are 64 possible moods and each can appear in any of the four figures, there are 256 possible forms of the standard-form categorical syllogism.

Together, the mood and figure tell us everything we need to know in order to test a standard-form categorical syllogism for validity.
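To make the count concrete, the sketch below enumerates every mood and figure and expands a chosen pair into its three propositions. The figure numbering follows the usual textbook order (middle term as subject/predicate, predicate/predicate, subject/subject, predicate/subject); the `FIGURES` table and the `build` function are names assumed for illustration.

```python
from itertools import product

# Term order (subject, predicate) of the major and minor premise in each figure.
FIGURES = {
    1: (("M", "P"), ("S", "M")),
    2: (("P", "M"), ("S", "M")),
    3: (("M", "P"), ("M", "S")),
    4: (("P", "M"), ("M", "S")),
}


def build(mood, figure):
    """Expand a mood such as "EIO" and a figure number into three propositions."""
    (major_s, major_p), (minor_s, minor_p) = FIGURES[figure]
    return (
        (mood[0], major_s, major_p),   # major premise
        (mood[1], minor_s, minor_p),   # minor premise
        (mood[2], "S", "P"),           # conclusion: minor term, then major term
    )


FORMS = [("".join(m), f) for m in product("AEIO", repeat=3) for f in FIGURES]

print(len(FORMS))          # 64 moods x 4 figures
print(build("AAA", 1))
```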

E. Rules and Fallacies - (more on the 6 Rules of Syllogisms)

There are six rules a standard-form categorical syllogism must meet in order to be valid. If it fails to meet any one of these rules, it is invalid. Each rule has an accompanying fallacy that alerts us to the specific way in which a categorical syllogism can be invalid.

1. The middle term must be distributed at least once.

2. If a term is distributed in the conclusion, it must also be distributed in its corresponding premise.

3. A categorical syllogism cannot have two negative premises.

4. A negative premise must have a negative conclusion.

5. A negative conclusion must have a negative premise.

6. Two universal premises cannot have a particular conclusion.

Rule 1: We understand the concept of distribution, from Chapter 5, as referring to the entirety of a class being included in, or excluded from, another class. We know that universal propositions distribute the subject term, while negative propositions distribute the predicate term. When the middle term is not distributed in either premise, the argument commits the fallacy of undistributed middle.

Rule 2: A term does not have to be distributed in the conclusion, but if it is, find its corresponding premise. The corresponding premise is the one that contains the term or terms (minor or major—or both) distributed in the conclusion. For example, if the conclusion is an E-proposition, both the subject and predicate terms are distributed. These terms must also be distributed in their respective premise in order for the rule to be met. If the rule is broken, the argument commits the fallacy of illicit major or the fallacy of illicit minor.

Rule 3: If the premises are both negative, there is no way to establish a relation between the major and minor terms. When this rule is broken, the argument commits the fallacy of exclusive premises.

Rule 4: Rule 3 tells us that we cannot have two negative premises and still have a valid argument. This does not mean, however, that we can never have a negative premise. The only requirement in this case is that the conclusion also be negative, so that the relation between major and minor terms can be established. When this rule is broken, the argument commits the fallacy of affirmative conclusion/negative premise.

Rule 5: This rule is the converse of Rule 4. Rule 3 tells us that we cannot have two negative premises and still have a valid argument. This does not mean, however, that we can never have a negative conclusion. The only requirement in this case is that one premise also be negative, so that the relation between major and minor terms can be established. When this rule is broken, the argument commits the fallacy of negative conclusion/affirmative premise.

Rule 6: Recall that, in the modern interpretation, a universal categorical proposition does not assume that a member of the subject class exists. Therefore, if both premises are universal and the conclusion is particular, the premises give us no way of establishing that the member of the subject class asserted by the conclusion exists. An argument that breaks this rule commits the existential fallacy. (Note: In the traditional interpretation, the argument is provisionally valid. If the subject class in the conclusion does not denote actually existing objects, the syllogism is invalid.)
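The six rules can be applied mechanically once the mood and figure are known. The following sketch, under the modern interpretation, returns the list of fallacies a form commits; an empty list means the form passes all six rules. The table and function names are illustrative assumptions.

```python
from itertools import product

# Term order (subject, predicate) of the major and minor premise in each figure.
FIGURES = {
    1: (("M", "P"), ("S", "M")),
    2: (("P", "M"), ("S", "M")),
    3: (("M", "P"), ("M", "S")),
    4: (("P", "M"), ("M", "S")),
}
UNIVERSAL = {"A", "E"}
NEGATIVE = {"E", "O"}


def distributed(kind, subject, predicate):
    """Terms distributed in one proposition (see Rule 1)."""
    terms = set()
    if kind in UNIVERSAL:
        terms.add(subject)
    if kind in NEGATIVE:
        terms.add(predicate)
    return terms


def fallacies(mood, figure):
    major, minor, conclusion = mood
    (major_s, major_p), (minor_s, minor_p) = FIGURES[figure]
    d_major = distributed(major, major_s, major_p)
    d_minor = distributed(minor, minor_s, minor_p)
    d_conclusion = distributed(conclusion, "S", "P")
    negative_premises = (major in NEGATIVE) + (minor in NEGATIVE)

    found = []
    if "M" not in d_major | d_minor:                              # Rule 1
        found.append("undistributed middle")
    if "P" in d_conclusion and "P" not in d_major:                # Rule 2
        found.append("illicit major")
    if "S" in d_conclusion and "S" not in d_minor:                # Rule 2
        found.append("illicit minor")
    if negative_premises == 2:                                    # Rule 3
        found.append("exclusive premises")
    if negative_premises >= 1 and conclusion not in NEGATIVE:     # Rule 4
        found.append("affirmative conclusion/negative premise")
    if conclusion in NEGATIVE and negative_premises == 0:         # Rule 5
        found.append("negative conclusion/affirmative premise")
    if major in UNIVERSAL and minor in UNIVERSAL and conclusion not in UNIVERSAL:
        found.append("existential fallacy")                       # Rule 6
    return found


print(fallacies("AAA", 1))   # passes every rule
print(fallacies("AAA", 2))   # undistributed middle
print(fallacies("AAI", 1))   # existential fallacy

valid = [(m, f) for m in product("AEIO", repeat=3) for f in FIGURES
         if not fallacies(m, f)]
print(len(valid))
```

Running the check over all 256 forms leaves only the forms that are valid without any assumption of existence.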

F. Ordinary Language Arguments

Ordinary language arguments can be analyzed either by Venn diagram or the rules of the syllogism. First, however, several guidelines must be followed:

1. If there are more than three classes of objects (three terms) in the argument, the terms must be reduced.

2. Eliminate superfluous words to reveal the categorical structure, quantity, and quality of the argument.

3. Identify synonyms and replace them with the terms appearing elsewhere in the argument.

4. Use conversion, obversion, and contraposition to begin the process of rewriting the argument in standard-form.

5. Eliminate prefixes as needed.

G. Enthymemes

We saw in Section F that some categorical arguments contain too many terms. There are also arguments, called enthymemes, which are incomplete. That is, the argument may contain only one premise and a conclusion, or only two premises, and so forth. In these cases, the goal is to make the argument complete, so that it can be rewritten as necessary in standard-form.

H. Sorites

Still another type of incomplete argument (enthymeme) is the sorites. This is a chain* of premises that lack intermediate conclusions. Here again, the goal is to establish a complete categorical syllogism that can be tested for validity. If any syllogism in the chain is invalid, the sorites is invalid.

The first step in the process is to rewrite the argument so that the premises appear one on top of another, with a line demarcating the chain of premises from the conclusion:

The first two premises are used to yield an intermediate conclusion, which then becomes a premise in the next sequence:

Conclusion

*We say the reasoning forms a chain because there are repeated terms that link two premises together.
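For the simplest kind of sorites, one built entirely from universal affirmative premises, the intermediate conclusions can be generated by walking the chain. This is a deliberately narrow sketch: it assumes every premise has the form "All X are Y" and that the premises are already ordered so each predicate is the next premise's subject. The function name and the example terms are hypothetical.

```python
def sorites_chain(premises):
    """Return the intermediate and final conclusions of an all-A sorites.

    Each premise is a (subject, predicate) pair read as "All subject are predicate".
    """
    conclusions = []
    current = premises[0]
    for nxt in premises[1:]:
        if current[1] != nxt[0]:
            raise ValueError("the chain is broken between %r and %r" % (current, nxt))
        # All S are M; all M are P; therefore all S are P.
        current = (current[0], nxt[1])
        conclusions.append(current)
    return conclusions


steps = sorites_chain([
    ("beagles", "dogs"),
    ("dogs", "mammals"),
    ("mammals", "animals"),
])
for subject, predicate in steps:
    print("All %s are %s" % (subject, predicate))
```

Each generated step is itself a syllogism that can be tested on its own; a sorites with negative or particular premises needs the full rules rather than this shortcut.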

Chapter 7: Propositional Logic

We already know that every statement is a sentence that is either true or false. That is, every statement is a sentence with a truth value. The concept of a truth value is at the core of this chapter. Propositional logic involves the study of how complex statements are assembled and disassembled, as well as how we can replace one statement with another that is logically equivalent to it. The following concepts are key to understanding the systems presented in this chapter, as well as in Chapter 8 and Chapter 9: simple statement, compound (or complex) statement, and truth function.

A. Logical Operators and Translations

There are two types of statement: simple and compound. A simple statement is one that does not contain another statement as a component. Another way to describe a simple statement is to say that it contains a subject and a verb. It can also contain dependent clauses, but the basic idea is that the smallest grammatical unit has a truth value. In the notation of symbolic logic, these statements are represented by capital letters A–Z.

A compound statement contains at least one simple statement as a component, along with a connective. There are five connectives: negation, conjunction, disjunction, conditional, and biconditional. In the notation of symbolic logic, these connectives are represented by operators:

Ordinary language is translated into symbolic logic notation using the aforementioned capital letters A–Z. In general we translate connectives as follows:

When translating complex statements from ordinary language into symbolic logic notation, isolate the simple sentences and the connectives.

B. Compound Statements

Here are four rules to help organize your thinking about translating complex statements:

1. Remember that every operator except the negation is always placed in between statements.

2. Tilde is always placed to the left of whatever is negated.

3. Tilde never goes, by itself, in between two statements.

4. “Parentheses, brackets, and braces are required in order to eliminate ambiguity in a complex statement” (p. 297). Treat any such grouping symbol as a way of gathering a complex statement into a single unit within a larger complex statement.

Every compound statement consisting of simple statements and two or more operators is governed or driven by a main operator. There is always only one main operator in any compound statement, and that operator is either one of the four that appear in between statements or the tilde that appears in front of the statement that is negated.

C. Truth Functions

We know that a simple statement has a truth value: it is either true or false.

A compound statement also has truth values. These are functions of the simple statements in combination with the meaning of a given operator:

Negation tells us, “It is not the case that…”

Conjunction tells us, “Both…are the case.”

Disjunction tells us, “At least one is the case…”

The conditional mirrors the concept of validity: if the antecedent is true, the consequent cannot be false. A conditional is false only when its antecedent is true and its consequent is false, just as an inference is invalid when the premises are true and the conclusion is false.

The biconditional tells us, “Either both are the case, or neither is…”

D. Truth Tables for Propositions

When we construct a truth table to determine the possible truth values of a given statement, we first need to know at least two things:

- The number of simple statements that are in the compound statement. The number of rows of the truth table follows this formula: R = 2^n, where n is the number of simple statements.

- The main operator. Any statement containing two or more operators will be calculated according to an order of operations. The main operator determines the final truth values of the statement, and as such its values are calculated last.
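The row-count formula can be illustrated with a minimal Python sketch. The function name `truth_table_rows` and the dictionary layout are illustrative choices, not part of the textbook's method.

```python
from itertools import product

def truth_table_rows(names):
    """Return one dict per row, covering every T/F combination."""
    return [dict(zip(names, values))
            for values in product([True, False], repeat=len(names))]

# Three simple statements give 2 ** 3 = 8 rows.
rows = truth_table_rows(["P", "Q", "R"])
print(len(rows))   # 8
print(rows[0])     # first row: all three statements true
```

Each added simple statement doubles the number of rows, which is why large statements quickly become tedious to check by hand.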

When truth values are provided, we calculate to the main operator using the same order of operations as when we are calculating all possible truth values. If you are told that P, Q, and R have values T, F, and T, respectively, you can calculate the value of the compound statement, P ⊃ (Q • R):
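The calculation for these assigned values can be sketched in Python as follows. The helper `implies` is a hypothetical name for the horseshoe; Python's `and` stands in for the dot.

```python
def implies(antecedent, consequent):
    """Horseshoe: false only when the antecedent is true and the consequent is false."""
    return (not antecedent) or consequent

P, Q, R = True, False, True

inner = Q and R              # the part in parentheses is calculated first
result = implies(P, inner)   # the main operator is calculated last

print(inner)    # False
print(result)   # False
```

Because Q is false, the conjunction Q • R is false; a true antecedent with a false consequent then makes the whole conditional false.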

E. Contingent and Noncontingent Statements

A contingent statement is true* on at least one row of the truth table, and false on at least one row of the truth table. In other words, such a statement is sometimes true and sometimes false. A noncontingent statement is not dependent on the truth values of the component parts. Such a statement is either always true (a tautology) or always false (self-contradictory).
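As a rough sketch, the three categories can be checked mechanically by collecting the value under the main operator on every row. The function `classify` and the use of Python lambdas to stand for statements are assumptions made for illustration.

```python
from itertools import product

def classify(statement, n):
    """Classify a statement of n simple components by its truth-table column."""
    column = [statement(*row) for row in product([True, False], repeat=n)]
    if all(column):
        return "tautology"
    if not any(column):
        return "self-contradictory"
    return "contingent"

print(classify(lambda p: p or not p, 1))        # tautology
print(classify(lambda p: p and not p, 1))       # self-contradictory
print(classify(lambda p, q: (not p) or q, 2))   # contingent
```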

F. Logical Equivalence

Two or more statements are logically equivalent when they have the same values* on each row of the truth table. We will see in Chapter 8 that the replacement rules are based on such equivalences. Truth tables for the following pairs of statements will reveal their logical equivalence. They are just a few of myriad truth-functionally equivalent statements:

P ⊃ Q and ~ P v Q

P ⊃ Q and ~ Q ⊃ ~ P

~ (P • Q) and ~ P v ~ Q
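The three pairs above can be compared row by row with a short Python sketch. The names `implies` and `equivalent` are hypothetical helpers; each statement is written as a function of its simple components.

```python
from itertools import product

def implies(a, b):
    """Horseshoe: false only when a is true and b is false."""
    return (not a) or b

def equivalent(f, g, n):
    """True when f and g agree on every row of an n-variable truth table."""
    return all(f(*row) == g(*row) for row in product([True, False], repeat=n))

pairs = [
    (lambda p, q: implies(p, q), lambda p, q: (not p) or q),
    (lambda p, q: implies(p, q), lambda p, q: implies(not q, not p)),
    (lambda p, q: not (p and q), lambda p, q: (not p) or (not q)),
]

for left, right in pairs:
    print(equivalent(left, right, 2))   # True for each pair
```

By contrast, P ⊃ Q and Q ⊃ P disagree on the row where P is true and Q is false, so the same check reports that they are not equivalent.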

G. Contradictory, Consistent, and Inconsistent Statements

Two statements are contradictory when they have opposite truth values* on every row of the truth table; that is, they are never both true and never both false on the same row.

Two or more statements are consistent when they are true* at the same time on at least one row of the truth table.

Two or more statements are inconsistent when they are not true* at the same time on even one row of the truth table.

*Under the main operator.
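The definitions in Section G can be tested the same way. This is a minimal sketch; `compare` is a hypothetical helper that pairs up the values under each statement's main operator on every row.

```python
from itertools import product

def implies(a, b):
    """Horseshoe: false only when a is true and b is false."""
    return (not a) or b

def compare(f, g, n):
    """Report whether two statements are contradictory and whether they are consistent."""
    rows = [(f(*row), g(*row)) for row in product([True, False], repeat=n)]
    return {
        "contradictory": all(a != b for a, b in rows),  # opposite on every row
        "consistent": any(a and b for a, b in rows),    # both true on some row
    }

# P ⊃ Q compared with P • ~Q
first = compare(lambda p, q: implies(p, q), lambda p, q: p and not q, 2)
# P v Q compared with P • Q
second = compare(lambda p, q: p or q, lambda p, q: p and q, 2)

print(first)    # {'contradictory': True, 'consistent': False}
print(second)   # {'contradictory': False, 'consistent': True}
```

A pair that is not consistent is inconsistent, so the first pair is both contradictory and inconsistent.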

H. Truth Tables for Arguments

We have seen that truth tables can be constructed for individual statements and pairs of statements. The truth table allows us to classify statements according to their truth values under the main operator. The same can be done for arguments in order to determine validity or invalidity.

An argument is invalid if its truth table contains even one row on which the premises are all true while the conclusion is false. An argument is valid if its truth table contains no such row.
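The validity test can be sketched in Python by searching for a row with all true premises and a false conclusion. The function `counterexample` and the two sample arguments are illustrative assumptions.

```python
from itertools import product

def implies(a, b):
    """Horseshoe: false only when a is true and b is false."""
    return (not a) or b

def counterexample(premises, conclusion, n):
    """Return a row with all premises true and the conclusion false, or None."""
    for row in product([True, False], repeat=n):
        if all(p(*row) for p in premises) and not conclusion(*row):
            return row
    return None

# P ⊃ Q, P, therefore Q
valid_case = counterexample(
    [lambda p, q: implies(p, q), lambda p, q: p], lambda p, q: q, 2)
# P ⊃ Q, Q, therefore P
invalid_case = counterexample(
    [lambda p, q: implies(p, q), lambda p, q: q], lambda p, q: p, 2)

print(valid_case)     # None, so the argument is valid
print(invalid_case)   # (False, True), so the argument is invalid
```

The row returned for the second argument is the assignment P false, Q true, on which both premises are true and the conclusion is false.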

I. Indirect Truth Tables

The indirect truth table is a powerful and expedient tool for testing an argument’s validity:

- Assume the premises are true and the conclusion is false. In other words, assume the argument is invalid. This involves placing a T under the main operator of each of the premises, and an F under the main operator of the conclusion. If any of the statements is simple, the T or the F is placed directly beneath it.

- Based on the truth definitions for each of the operators, calculate backward from the assumed value to the statement’s constituent elements.

- If you can calculate to the constituent elements without contradicting the truth definitions for the given operator, the argument is invalid.

- If you cannot calculate to the constituent elements without contradicting the truth definitions for the given operator, the argument is valid.

Chapter 8: Natural Deduction

A. Natural Deduction

The system of natural deduction is a specific proof procedure based on the truth definitions of the logical operators, ~, •, v, ⊃, and ≡. This system uses implication rules, which are valid argument forms, to justify each step in the derivation of a valid argument’s conclusion. This system also uses replacement rules, which are pairs of logically equivalent statements, to aid in the derivation of a valid argument’s conclusion.

It is important to note that implication rules are applicable only to entire lines in a proof, rather than portions of a line. Replacement rules can, however, be used on a portion of a line in a proof.

B. Implication Rules I

Modus ponens (MP), modus tollens (MT), hypothetical syllogism (HS), and disjunctive syllogism (DS) make up the first four implication rules in this system of natural deduction:

MP

Premises: P ⊃ Q; P

Conclusion: Q

MT

Premises: P ⊃ Q; ~ Q

Conclusion: ~ P

HS

Premises: P ⊃ Q; Q ⊃ R

Conclusion: P ⊃ R

DS

Premises: P v Q; ~ P

Conclusion: Q

Each implication rule involves dismantling an operator (horseshoe and wedge). In the case of HS, the horseshoe’s elements are rearranged. It is also worth noting that each of these rules involves drawing an inference from two statements.
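To show how these rules operate on whole lines of a proof, here is a minimal Python sketch. The tuple encoding of statements (`("if", A, B)`, `("or", A, B)`, `("not", A)`), the function names, and the sample argument are all assumptions made for illustration; DS is implemented only in the form given above, where the left disjunct is negated.

```python
def is_compound(formula, operator):
    """True when formula is a tuple whose main operator is the given one."""
    return isinstance(formula, tuple) and formula[0] == operator

def modus_ponens(conditional, antecedent):
    if is_compound(conditional, "if") and conditional[1] == antecedent:
        return conditional[2]
    raise ValueError("MP does not apply to these lines")

def modus_tollens(conditional, negated_consequent):
    if is_compound(conditional, "if") and negated_consequent == ("not", conditional[2]):
        return ("not", conditional[1])
    raise ValueError("MT does not apply to these lines")

def hypothetical_syllogism(first, second):
    if is_compound(first, "if") and is_compound(second, "if") and first[2] == second[1]:
        return ("if", first[1], second[2])
    raise ValueError("HS does not apply to these lines")

def disjunctive_syllogism(disjunction, negated_left):
    if is_compound(disjunction, "or") and negated_left == ("not", disjunction[1]):
        return disjunction[2]
    raise ValueError("DS does not apply to these lines")

# Premises: 1. A ⊃ B   2. B ⊃ C   3. ~C   4. A v D
line1 = ("if", "A", "B")
line2 = ("if", "B", "C")
line3 = ("not", "C")
line4 = ("or", "A", "D")

line5 = hypothetical_syllogism(line1, line2)   # A ⊃ C    (1, 2, HS)
line6 = modus_tollens(line5, line3)            # ~A       (5, 3, MT)
line7 = disjunctive_syllogism(line4, line6)    # D        (4, 6, DS)
print(line7)   # D
```

Each function compares entire lines and raises an error otherwise, which reflects the restriction that implication rules apply only to whole lines of a proof.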

C. Tactics and Strategy

Strategy is the overall approach you take to setting up a proof. This will be increasingly important to think about when the arguments become more complex. Tactics are small-scale maneuvers; at this stage, they are what you use to work your way through proofs. In other words, rules like MP and DS are tactics.

The following strategies will help you form the foundation for solving more complicated proofs later on:

- Simplify and isolate using the following tactics: MP, MT, and DS.

- Look for negation using the following tactics: MT and DS.

- Look for conditionals using the following tactics: MP, MT, HS.

- Look at the conclusion and work backward using the following tactic: anticipate what you need to reach the conclusion by looking for the line that should immediately precede it.

D. Implication Rules II

Constructive dilemma (CD), simplification (Simp), conjunction (Conj), and addition (Add) make up the second four implication rules in this system of natural deduction:

CD

Premises: (P ⊃ Q) • (R ⊃ S); P v R

Conclusion: Q v S

Simp

Premise: P • Q

Conclusion: P

Conj

Premises: P; Q

Conclusion: P • Q

Add

Premise: P

Conclusion: P v Q

As with the first four implication rules, the second four involve assembling or disassembling statements according to their main operators’ truth definitions.

The following strategies will help you form the foundation for solving more complicated proofs later on:

1. Simplify and isolate using the following tactics: MP, MT, DS, and Simp.

2. Look for negation using the following tactics: MT and DS.

3. Look for conditionals using the following tactics: MP, MT, HS, and CD.

4. Look at the conclusion and work backward using the following tactic: anticipate what you need to reach the conclusion by looking for the line that should immediately precede it.

5. Add whatever you need using the following tactic: Add.

6. Combine lines by using the following tactic: Conj.

E. Replacement Rules I

Both sets of replacement rules (Sections E and F) enlist the principle of replacement, which states that logically equivalent expressions may replace each other within the context of a proof. Replacement rules can be used either on a part of a line or an entire line in a proof.

DeMorgan (DM), commutation (Com), association (Assoc), distribution (Dist), and double negation (DN) are sets of equivalent statements, one of which can be substituted for the other in a proof. Replacement rules aid you in assembling or disassembling statements.

The following strategies will help you form the foundation for solving more complicated proofs later on:

- Simplify and isolate using the following tactics: MP, MT, DS, and Simp.

- Look for negation using the following tactics: MT, DS, DM, and DN.

- Look for conditionals using the following tactics: MP, MT, HS, and CD.

- Look at the conclusion and work backward using the following tactic: anticipate what you need to reach the conclusion by looking for the line that should immediately precede it.

- Add whatever you need using the following tactic: Add.

- Combine lines by using the following tactic: Conj.

- Regroup lines by using the following tactics: Com, Assoc, Dist.

F. Replacement Rules II

Transposition (Trans), material implication (Impl), material equivalence (Equiv), exportation (Exp), and tautology (Taut) make up the last set of replacement rules. The last set of strategies and tactics for implication and replacement rules combines the previous strategies and tactics and adds some more:

- Simplify and isolate using the following tactics: MP, MT, DS, and Simp.

- Look for negation using the following tactics: MT, DS, DM, DN, Trans, and Impl.

- Look for conditionals using the following tactics: MP, MT, HS, CD, Impl, Equiv, and Exp.

- Look at the conclusion and work backward using the following tactic: anticipate what you need to reach the conclusion by looking for the line that should immediately precede it.

- Combine lines by using the following tactic: Conj.

- Add whatever you need using the following tactic: Add.

- Regroup lines by using the following tactics: Com, Assoc, Dist, and Taut.

G. Conditional Proof

Conditional proof allows you to prove a horseshoe statement by assuming its antecedent and deriving its consequent. It’s a veridical proof that says, “If I assume P and derive Q from it, then I can say, ‘P ⊃ Q.’”

Conditional proof is a good strategy to use for setting up the framework of a proof, particularly if the conclusion of the argument is a conditional claim. As with all assumptive proofs, the assumption must be “discharged,” which is to say, you must get out of the subproof sequence and back onto the main line. This is accomplished by achieving the goal of the subproof.
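The same pattern can be written as a short Lean 4 proof. This is only a sketch in a different notation: Lean writes the horseshoe as `→`, and the hypothesis names `h1`, `h2`, `hP`, and `hQ` are arbitrary labels, not part of the textbook's system.

```lean
-- Premises: P ⊃ Q and Q ⊃ R. Goal: P ⊃ R.
example (P Q R : Prop) (h1 : P → Q) (h2 : Q → R) : P → R := by
  intro hP               -- assume the antecedent P (this opens the subproof)
  have hQ : Q := h1 hP   -- modus ponens on the first premise and the assumption
  exact h2 hQ            -- modus ponens on the second premise; the consequent R is reached
```

Once R is derived under the assumption, the subproof's goal is met and the assumption is discharged, leaving the conditional P ⊃ R on the main line.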

H. Indirect Proof